Here is my PowerBI Query:

BaseUrl = "https://******************.timehub.7pace.com/api/odata/v3.3-beta/workItemsHierarchyAllLevels?",

WorkItemsFilter = "System_TeamProject eq '******************'",

RollupFields = "Microsoft_VSTS_Scheduling_OriginalEstimate,Microsoft_VSTS_Scheduling_RemainingWork,Microsoft_VSTS_Scheduling_Effort",

SelectFields = "Parent,System_Id,System_Title,System_WorkItemType,System_AreaPath,System_State,TrackedTotal,RollupValue1,RollupValue2,RollupValue3,Microsoft_VSTS_Common_ClosedDate,Microsoft_VSTS_Scheduling_StartDate",

FilterConditions = "System_WorkItemType eq 'Feature' and (Microsoft_VSTS_Common_ClosedDate eq null or Microsoft_VSTS_Common_ClosedDate ge 2023-01-01)",

ExpandFields = "Parent($select=System_Title)",

Source = OData.Feed(BaseUrl

& "workItemsFilter=" & WorkItemsFilter

& "&rollupFields=" & RollupFields

& "&$select=" & SelectFields

& "&$filter=" & FilterConditions

& "&$expand=" & ExpandFields,

null

)

This was working fine but it is not working anymore. It actually seems to work once in a while but not constantly... I don't really have a pattern.

Here is the error message I get in PowerBI:

DataSource.Error: Microsoft.Mashup.Engine1.Library.Resources.HttpResource: Request failed:

OData Version: 3 and 4, Error: The remote server returned an error: (500) Internal Server Error.

OData Version: 4, Error: The remote server returned an error: (500) Internal Server Error.

OData Version: 3, Error: The remote server returned an error: (404) Not Found.

Details:

DataSourceKind=OData

DataSourcePath=https://******************'.timehub.7pace.com/api/odata/v3.3-beta/workItemsHierarchyAllLevels

-

Hi,

I have the same problem with loading data from workItemsHierarchyAllLevels endpoint. I load data from 7paceTimeTracker to ADF table. In ADF there are pipelines which load data from 7paceTimeTracker with Odata query to OData resource dataset. It was working fine untill last week (it was working good from over a one year and transfering data from 2 years ago). No changes with parameters or definition of the pipeline, or with oData query were made. Last week the pipeline just has started to fail with error:

Operation on target Execute Pipeline1 failed: Operation on target copy hierarchy to stage failed: ErrorCode=ODataRequestNotSucceeded,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=Requesting response from path workItemsHierarchyAllLevels and query customFields=Custom.FeatureWewZew&workItemsFilter=%28System_TeamProject%20eq%20%27********%27%29%20

or%20%28System_TeamProject%20eq%20%27********%20development%27%29%20

or%20%28System_TeamProject%20eq%20%27********%27%29%20%20

or%20%28System_TeamProject%20eq%20%27********%27%29%20

or%20%28System_TeamProject%20eq%20%27********%27%29%20

or%20%28System_TeamProject%20eq%20%27********%27%29%20

or%20%28System_TeamProject%20eq%20%27********%27%29%20

or%20%28System_TeamProject%20eq%20%27********%27%29 failed with status code InternalServerError and message {"statusCode":500,"errorCode":"TimeoutExpired","errorDescription":"This query is taking too long to execute. Please follow these recommendations to make it run faster: https://www.7pace.com/redir/proper-odata-usage"}.,Source=Microsoft.DataTransfer.Runtime.ODataConnector,'

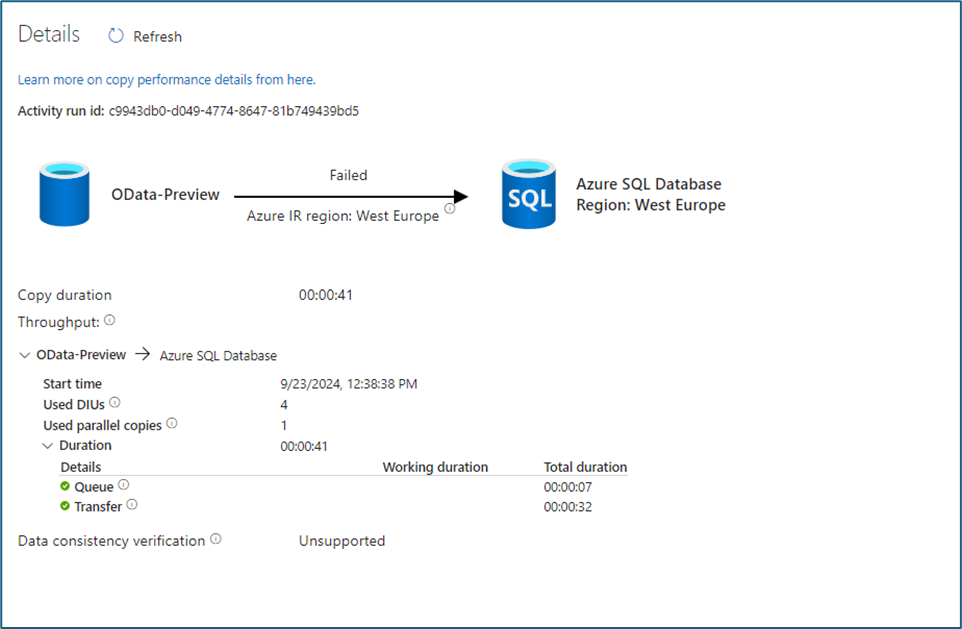

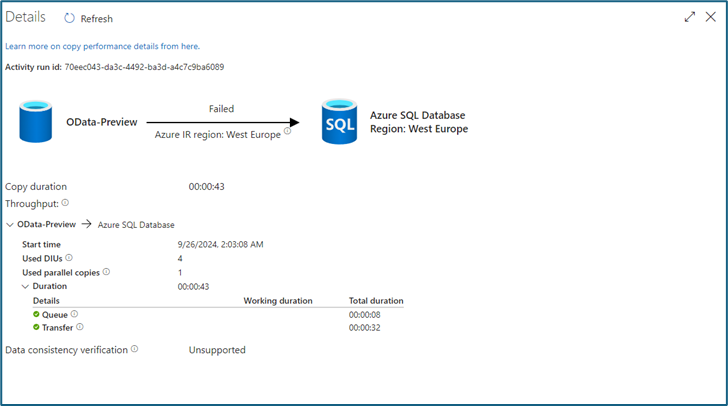

In the details of pipeline's run we can see that the transfer time is only 32 sec , so it looks like pipeline has problem with getting to 7PaceTimeTraceker server and with getting response.

Do you have any idea when it will be possible to get to server via API to load data to ADF?

-

Hi Vanja,

Thanks for reply.

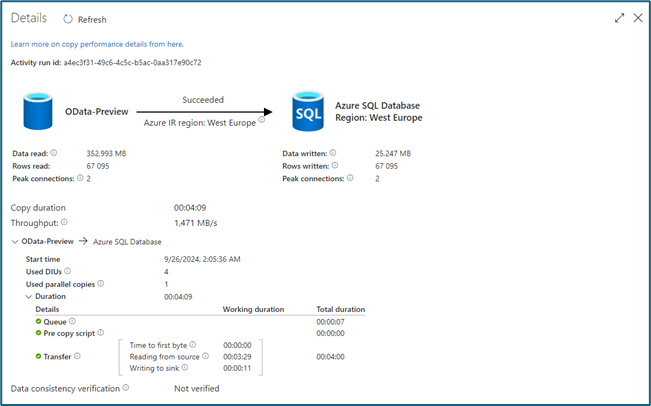

In original query workItemsHierarchyAllLevels endpoint we had no period limitation (it transfers about 67 000 records, so it is not a super big dataset). Transferring data from the workItemsHierarchyAllLevels endpoint in this formula worked fine until last week. After your response we have added date limitation for workItemsHierarchyAllLevels endpoints query: “&WorkLogsFilter=Timestamp ge 2023-01-01 and Timestamp le 2024-09-30”. And we have tested different cases, for example: last year, half a year, only one month etc. And our conclusion is:

- It does’t matter how long period limitation is set in the query – copying data to ADF table sometimes runs, sometimes not

- When the period limitation was set in the query, we thought that it helped, but unfortunately no…. sometimes the pipeline is connecting to 7paceTimeTracker server, sometimes not (but in most cases it does).

- According to your documentation we also removed fields: "TrackedItself", "TrackedTotal", "TrackedItselfBillable", "TrackedTotalBillable" from workItemsHierarchyAllLevels endpoints query, but there was still problem with response from 7paceTimeTracker server

- When the pipeline ends successfully time to first byte is about 1 sec ( so 7paceTimeTracker server responds very quickly) and when it ends with failure the 7paceTimeTracker server doesn’t even respond at all and timeout is after about 30 sec. It really looks like 7paceTimeTracker server sometimes has problem to respond and there is no rule on what it depends on

And the question is: what really does the period limitation in workItemsHierarchyAllLevels endpoints query? – because if the query hasn’t set period limitation it is transferring all the 67 000 rows, and if it has – it is transferring the same number of rows (doesn’t matter if we take one year period or only one month). It seems like this period limitation doesn’t have impact to the amount of transferring dataset from workItemsHierarchyAllLevels endpoint

In all these cases error is still the same: “InternalServerError and message {"statusCode":500,"errorCode":"TimeoutExpired"……”

-

Hi Karolina,

As I wrote in my previous reply, the workItemsHierarchyAllLevels endpoint is very load heavy, and querying 2 years worth of data can result in timeouts, and using it without a time period will most certainly result in timeouts since it will pull all of your work items along with their Timetracker worklogs and perform calculations along the hierarchy. I would suggest reducing the time period for which you are querying data to at least 3 months.

And if you consistently want the same data but only the updates, you can configure incremental refresh to only fetch changes in data, meaning new and edited (or deleted) worklogs.

Since I don't see your exact query I cannot suggest specific changes, but you should utilize our custom made prefilters which preload data and then fetch it - workitemsFilter and the worklogsFilter. This is in case you are using the default OData $filter.

Best regards,

Vanja -

Hi Vanja,

Ok, I understand we can limit time period in our workItemsHierarchyAllLevels endpoint query, but we don’t understand why this time filter doesn’t reduce number of rows?

Case 1:

“select=System_Id,System_WorkItemType,System_TeamProject,System_Title,System_State,System_AreaPath,System_AssignedTo,Microsoft_VSTS_Scheduling_Effort,CustomStringField1

&customFields=Custom.FeatureWewZew

&workItemsFilter=(System_TeamProject eq 'PROJECT 1')

or (System_TeamProject eq 'PROJECT 2')

or (System_TeamProject eq 'PROJECT 3')

or (System_TeamProject eq ''PROJECT 4')

or (System_TeamProject eq 'PROJECT 5')

or (System_TeamProject eq 'PROJECT 6')

or (System_TeamProject eq 'PROJECT 7')

or (System_TeamProject eq 'PROJECT 8')

&WorkLogsFilter=Timestamp ge 2023-01-01 and Timestamp le 2024-09-30”

Result: 67 095 rows read and copied to ADF table

Case2:

“select=System_Id,System_WorkItemType,System_TeamProject,System_Title,System_State,System_AreaPath,System_AssignedTo,Microsoft_VSTS_Scheduling_Effort,CustomStringField1

&customFields=Custom.FeatureWewZew

&workItemsFilter=(System_TeamProject eq 'PROJECT 1')

or (System_TeamProject eq 'PROJECT 2')

or (System_TeamProject eq 'PROJECT 3')

or (System_TeamProject eq ''PROJECT 4')

or (System_TeamProject eq 'PROJECT 5')

or (System_TeamProject eq 'PROJECT 6')

or (System_TeamProject eq 'PROJECT 7')

or (System_TeamProject eq 'PROJECT 8')

&WorkLogsFilter=Timestamp ge 2024-09-01 and Timestamp le 2024-09-30”

Result: 67 095 rows read and copied to ADF table

Please sign in to leave a comment.

Comments

6 comments